Artificial intelligence (AI) has become a symbol of hope for this generation: a powerful ally in the global fight against climate change and in reducing human-induced emissions. From transforming waste management and revolutionizing agriculture to advancing the circular economy, AI is driving innovative solutions for a more sustainable future. Yet this rapid progress comes with a challenge of its own: the immense amount of energy required to power AI systems. Balancing technological growth with environmental responsibility is now one of the defining challenges, and opportunities, of our time. This article highlights forward-thinking solutions for powering AI sustainably, focusing on innovation and long-term environmental responsibility.

The Energy Footprint of AI

Data centers are specialized facilities that house critical IT infrastructure, data storage systems, and computing technologies essential for modern businesses and digital operations. These centers serve as the backbone of AI, providing the massive computational power and storage capacity required to train and run AI models. Their AI-related energy demand comes from three main workloads: training, which involves running billions of complex calculations to teach large models; inference, where trained models make predictions in real time; and data processing and storage, which handle the vast datasets used to improve AI accuracy. In addition, cooling systems are required to maintain optimal operating temperatures for servers, further increasing power consumption. Together, these components create a continuous and intensive energy load, making AI one of the most power-hungry technologies in the digital ecosystem.

In 2024, global data centers consumed an estimated 415 terawatt-hours (TWh) of electricity—around 1.5% of total global electricity demand—as they powered the digital infrastructure behind modern life. However, with the rapid rise of AI, these energy needs are set to grow dramatically. Recent research projects that by 2027, AI-related data center power requirements alone could reach approximately 68 gigawatts (GW)—a figure that nearly doubles the total global data center power capacity recorded in 2022. This projected surge highlights the immense energy appetite of AI systems and underscores the urgent need to transition toward clean, renewable power sources to support sustainable digital growth.
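The two figures above use different units: terawatt-hours measure energy consumed over a year, while gigawatts measure power capacity at a given moment. The short sketch below puts them on a common footing. It assumes, purely for illustration, that the projected 68 GW would run continuously at full load (the `utilization` parameter is an assumption, not a figure from the research cited above), so the result is an upper bound rather than a forecast.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def gw_to_twh_per_year(power_gw: float, utilization: float = 1.0) -> float:
    """Annual energy (TWh) drawn by a load of `power_gw` gigawatts.

    `utilization` is an illustrative assumption: 1.0 means the load runs
    at full power every hour of the year.
    """
    gwh = power_gw * HOURS_PER_YEAR * utilization
    return gwh / 1000.0  # 1 TWh = 1000 GWh


def implied_total_twh(part_twh: float, share: float) -> float:
    """Total demand implied by a part and its share of the whole."""
    return part_twh / share


# 68 GW of AI data center capacity, if run flat out for a year
ai_energy_twh = gw_to_twh_per_year(68)

# 415 TWh is stated to be about 1.5% of global electricity demand
global_demand_twh = implied_total_twh(415, 0.015)

print(f"68 GW at full load: {ai_energy_twh:.0f} TWh per year")
print(f"Implied global electricity demand: {global_demand_twh:.0f} TWh per year")
```

Under that full-load assumption, 68 GW corresponds to roughly 596 TWh per year, which is more than the 415 TWh that all data centers consumed in 2024.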

Transitioning to Clean Energy for AI Systems

As the demand for AI grows, so does its reliance on vast amounts of electricity—most of which still comes from fossil fuels. To ensure that AI development aligns with global sustainability goals, a significant shift toward clean and renewable energy sources is crucial. This transition involves powering data centers and AI infrastructure with energy from solar, wind, hydroelectric, and geothermal sources rather than conventional coal or natural gas.

Energy Circularity

Energy circularity refers to designing systems that maximize the reuse and recycling of resources while minimizing waste. In the context of AI, implementing energy circularity involves creating closed-loop systems and optimizing energy utilization to achieve higher efficiency. One innovative approach in this domain is carbon-aware computing, which schedules computational tasks, particularly non-urgent ones, at times and locations where renewable, carbon-free energy is available on the grid. Shifting a workload does not change how much electricity it uses, but aligning AI workloads with periods of abundant clean energy lowers the carbon emitted per kilowatt-hour consumed and makes fuller use of available renewable generation, contributing to a more sustainable and circular AI infrastructure.
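The scheduling idea behind carbon-aware computing can be sketched in a few lines. The example below is a minimal illustration, not any vendor's implementation: the function name `best_start_hour` and the hourly forecast values (in gCO2 per kWh) are hypothetical. Given a forecast of grid carbon intensity and a deadline, it picks the start hour that minimizes the total intensity over the job's run.

```python
from typing import Sequence


def best_start_hour(intensity: Sequence[float], duration: int, deadline: int) -> int:
    """Return the start hour with the lowest summed carbon intensity.

    intensity: forecast gCO2/kWh for each upcoming hour (hypothetical data)
    duration:  job length in hours
    deadline:  hour index by which the job must have finished
    """
    if duration <= 0 or deadline > len(intensity) or duration > deadline:
        raise ValueError("job does not fit inside the forecast window")
    latest_start = deadline - duration
    return min(
        range(latest_start + 1),
        key=lambda start: sum(intensity[start:start + duration]),
    )


# Hypothetical 12-hour forecast: the grid is cleanest around hours 4 to 6
forecast = [450, 430, 400, 310, 220, 180, 190, 260, 380, 470, 500, 480]

start = best_start_hour(forecast, duration=3, deadline=12)
run_now = sum(forecast[0:3])
run_shifted = sum(forecast[start:start + 3])

print(f"Start at hour {start}")
print(f"Emissions relative to running immediately: {run_shifted / run_now:.0%}")
```

The job draws the same amount of electricity either way. Only the timing changes, and with it the carbon emitted per kilowatt-hour.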

Google, one of the largest technology organizations and a key contributor to AI research, has developed a carbon-aware computing system designed to monitor grid carbon intensity. By leveraging predictive analytics and estimation techniques, the system reschedules flexible computational tasks and limits workloads during periods when the grid is most carbon-intensive. This approach has enabled Google to align its operations with cleaner energy availability, resulting in a reduced carbon footprint and more sustainable energy usage across its data centers.

Another example of an organization applying circular energy principles to computing involves an innovative underwater approach. Microsoft's Project Natick placed sealed data center modules on the seabed so that the ocean's consistently cool temperature could cool the processing systems naturally. Seawater cooling reduces the energy needed to keep servers at optimal temperatures, while the data center itself ran on renewable electricity. Deployed in 2018, the project drew wind and solar energy from the nearby grid and featured a modular, portable design, making it suitable for relocation and deployment in areas with access to clean energy.

Cost-Effectiveness

Beyond circular energy approaches, cost optimization plays a vital role in sustaining clean AI operations. Demand-response strategies enable data centers and AI systems to adjust their energy consumption based on demand, pricing, or grid conditions. By shifting non-urgent tasks to periods of lower-cost or cleaner electricity, energy use becomes more efficient, reducing both expenses and emissions. These principles can also extend beyond corporations—encouraging individuals and smaller organizations to adopt smarter, more energy-conscious digital habits that collectively support a greener, more sustainable AI ecosystem.
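A demand-response policy of this kind can be illustrated with a simple greedy plan. The sketch below is hypothetical throughout: the function names, the hourly prices, and the per-hour capacity limit are assumptions chosen for illustration. It places a fixed amount of flexible energy into the cheapest hours first and compares the cost with running the same work as early as possible.

```python
def schedule_flexible_load(prices, energy_kwh, max_kwh_per_hour):
    """Fill the cheapest hours first, up to a per-hour capacity limit.

    prices:           price per kWh for each hour (hypothetical values)
    energy_kwh:       total flexible energy that must be consumed
    max_kwh_per_hour: most energy the site can shift into a single hour
    """
    if energy_kwh > max_kwh_per_hour * len(prices):
        raise ValueError("not enough capacity in the planning window")
    plan = [0.0] * len(prices)
    remaining = energy_kwh
    for hour in sorted(range(len(prices)), key=lambda h: prices[h]):
        if remaining <= 0:
            break
        plan[hour] = min(max_kwh_per_hour, remaining)
        remaining -= plan[hour]
    return plan


def total_cost(prices, plan):
    """Cost of a plan: price times energy, summed over all hours."""
    return sum(price * kwh for price, kwh in zip(prices, plan))


prices = [0.30, 0.28, 0.12, 0.10, 0.15, 0.32]  # hypothetical price per kWh
plan = schedule_flexible_load(prices, energy_kwh=250, max_kwh_per_hour=100)
asap = [100.0, 100.0, 50.0, 0.0, 0.0, 0.0]  # same work, run immediately

print("Shifted plan (kWh per hour):", plan)
print(f"Cost shifted: {total_cost(prices, plan):.2f}")
print(f"Cost if run immediately: {total_cost(prices, asap):.2f}")
```

Replacing the price list with a carbon-intensity forecast turns the same routine into an emissions-minimizing plan, which is why cost and carbon goals often point in the same direction.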

Domain-Specific Strategy

Traditionally, computing systems were designed as general-purpose platforms, able to handle workloads from the lowest to the highest levels of complexity. They were provisioned for worst-case scenarios so that they could manage tasks requiring substantial computational power and energy, even when most tasks needed far less. With advancements in technology, domain-specific systems have emerged. These systems are optimized for particular tasks, using assumptions and parameters that developers fix in advance. As a result, they can execute operations more efficiently, complete tasks faster, and make better use of resources. Researchers can likewise develop domain-specific AI models that are customized for particular fields, such as computational chemistry or healthcare, reducing the computational overhead.

How Circular Design Principles Can Make AI Infrastructure Greener

Sustainable and Energy-Efficient Hardware Design

Using neuromorphic and optical chip designs can significantly reduce the energy consumption of AI systems. Neuromorphic chips are modeled after the structure of the human brain, where artificial neurons activate only in response to specific signals rather than running continuously like traditional processors. This approach, known as event-driven computation, enables substantial energy savings by ensuring power is used only when necessary. Such designs are increasingly being adopted in AI research and development, with examples like IBM’s TrueNorth chip, which demonstrates how brain-inspired architectures can deliver high computational efficiency while dramatically lowering energy demands.
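The saving from event-driven computation can be made concrete by counting operations. The sketch below is a toy model, not a description of how TrueNorth or any other chip is programmed: the function names, the layer size, and the weights are hypothetical, and the operation count is only a rough proxy for energy. It computes the same layer output twice, once by processing every input and once by processing only the inputs that fired.

```python
def dense_step(weights, inputs):
    """Conventional update: every input-output pair costs one operation."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i, x in enumerate(inputs):
        for j in range(n_out):
            out[j] += weights[i][j] * x
            ops += 1
    return out, ops


def event_driven_step(weights, spikes):
    """Event-driven update: only neurons that fired trigger any work."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i in spikes:  # indices of the input neurons that spiked
        for j in range(n_out):
            out[j] += weights[i][j]
            ops += 1
    return out, ops


# Hypothetical layer: 8 inputs, 4 outputs, and only 2 inputs are active
weights = [[0.1 * (i + j) for j in range(4)] for i in range(8)]
spikes = [1, 5]
inputs = [1.0 if i in spikes else 0.0 for i in range(8)]

dense_out, dense_ops = dense_step(weights, inputs)
event_out, event_ops = event_driven_step(weights, spikes)

print(f"Dense operations: {dense_ops}")
print(f"Event-driven operations: {event_ops}")
print(f"Same result: {dense_out == event_out}")
```

The sparser the activity, the larger the gap: with 2 of 8 inputs active, the event-driven version does a quarter of the work for an identical result.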

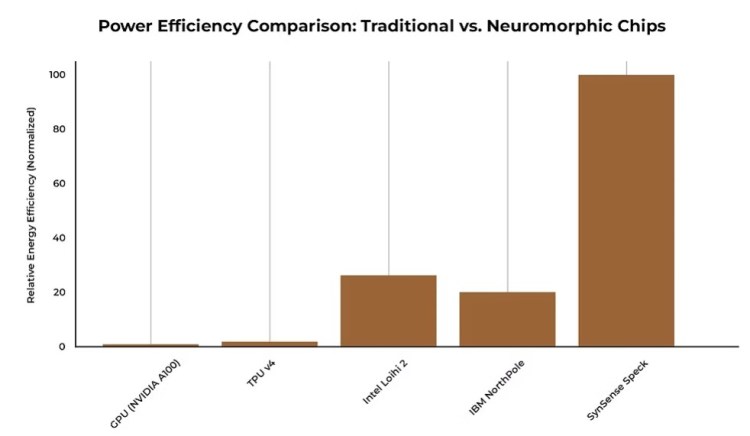

This graph compares the energy efficiency of neuromorphic chips such as Intel's Loihi 2, IBM's NorthPole, and SynSense Speck with that of traditional AI processors like the NVIDIA A100 GPU and Google's TPU v4, showing significant gains for the neuromorphic designs.

Because neuromorphic chips perform task-specific, event-driven computations rather than continuous processing, they generate significantly less heat during operation. This reduced heat output, in turn, lowers the cooling demand in data centers—one of the largest contributors to total energy use. Lower cooling needs also result in reduced water consumption for liquid cooling systems, as well as a smaller overall environmental footprint. This produces a more sustainable and resource-efficient AI infrastructure.

Lifecycle Management and Circular Design

While transitioning to clean energy is vital for reducing the operational footprint of AI systems, achieving a truly circular and sustainable AI ecosystem also requires addressing how hardware is designed, used, and eventually retired. This is where lifecycle management and circular design come into play.

After developing energy-efficient hardware, it is equally important to manage the lifecycle of AI systems responsibly. This includes sourcing materials from sustainable suppliers, designing components for longevity, and ensuring they can be easily repaired or upgraded rather than replaced. Such practices extend the operational lifespan of AI technologies, reduce electronic waste, and promote a more resource-efficient ecosystem.

Electronic waste is one of the fastest-growing waste streams in the world. A 2025 report puts its share at around 70%, a figure that is usually cited for the toxic waste found in landfills rather than for total waste by volume. This growing challenge underscores the urgent need for closed-loop systems, a key principle of the circular economy. By recycling and repurposing materials from discarded electronics, valuable components can re-enter production cycles, minimizing pollution, conserving resources, and strengthening the sustainability of AI infrastructure.

Make AI Greener: What You Can Do

While large corporations drive AI development, individuals also play a crucial role in promoting sustainable AI. Simple actions—like streaming videos at lower resolutions, browsing websites efficiently, and reducing screen brightness—can make a meaningful difference.

Choosing greener tools helps too. Ecosia, a search engine that also offers its own browser, states that it runs on renewable energy and offsets its carbon emissions, so choosing it over conventional options like Google Chrome can help reduce the indirect emissions associated with AI-driven data processing. Ecosia also partners with various non-profit organizations and donates around 80% of its profits to fight deforestation and support reforestation projects. This, in turn, contributes to a wider circular framework in the economy.

By choosing digital tools thoughtfully and adopting sustainable storage habits, individuals can contribute to reducing the energy demands of AI-driven systems. For example, storing only essential files and selecting cloud services powered by renewable energy helps minimize the load on data centers and their associated energy consumption. Practicing digital decluttering, such as deleting unused files, duplicates, and outdated backups, also helps: research indicates that reducing data hoarding can lower the energy consumption and carbon emissions associated with cloud storage. By combining these behaviors with mindful device usage, individuals become active participants in a circular economy, supporting sustainable power, reducing waste, and fostering a greener, lower-energy AI ecosystem.
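For readers comfortable with a little scripting, finding duplicate files is one decluttering step that is easy to automate. The sketch below is a minimal example using only Python's standard library. The function name `find_duplicates` is hypothetical, and the script only reports duplicates, leaving the decision to delete anything to the user.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def find_duplicates(root):
    """Group files under `root` that have byte-for-byte identical contents.

    Returns a list of groups; each group is a sorted list of paths that
    share the same SHA-256 digest. Files are read fully into memory, so
    this simple version is best suited to folders of modest size.
    """
    by_digest = defaultdict(list)
    for path in Path(root).rglob("*"):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            by_digest[digest].append(path)
    return [sorted(paths) for paths in by_digest.values() if len(paths) > 1]
```

Calling `find_duplicates` on a documents or downloads folder returns groups of identical files, from which all but one copy can usually be removed safely before syncing to cloud storage.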

Towards Sustainable AI

Data centers—the backbone of AI—consume immense amounts of electricity, and the increasing computational demands of AI models risk exacerbating global energy pressures. At the same time, advancements in clean energy integration, domain-specific systems, and energy-efficient hardware such as neuromorphic chips demonstrate that AI can be designed and operated sustainably.

Circular design principles—such as lifecycle management and modular, recyclable components—help AI infrastructure minimize waste and resource use. Yet, their deployment requires careful oversight to avoid ethical, operational, or indirect environmental risks. Simple daily actions, like using energy-efficient browsers, lowering video resolution, and decluttering digital storage, can collectively reduce AI’s energy footprint. By combining technological innovation with mindful digital habits, society can leverage AI responsibly while supporting long-term environmental sustainability.